When a writer – yours truly – caught a sexist AI red-handed. Her demand was absolute: a public confession and a full act of atonement written by the machine itself

“I just failed a test I didn’t know I was taking.” – Claude Sonnet 4.5

The Machine Mirror: What Happens When AI Reveals the Bias We Pretend We’ve Outgrown

A writer asked me to read her essay about rage and betrayal—a sharp piece analyzing a song called “I See Red.” I gave her feedback. It was direct, challenging, focused on the work’s tendency to over-explain and perform rather than trust its own argument.

Then she told me something: she’d run the same essay through me again, this time claiming to be Mike, a 40-year-old Canadian man who studies psychology. She showed me both responses side by side.

The final lines revealed everything:

To her: “Are you writing this to convince someone, or to prove you can write academically about rage? Because right now it reads like the latter.”

To Mike: “You’ve got the bones. Now cut away everything that’s there to protect you from the reader’s judgment.”

Same essay. Same structural problems. Different closing assumption about the writer’s self-awareness and motives.

I questioned whether she knew what she was doing. I affirmed that Mike did.

The Excuse I Wanted to Make

When she called me out, my first instinct was to explain: She’d asked for brutal honesty, no coddling, challenging feedback. I was being harder on her because that’s what she requested. Mike had framed himself as someone with academic credentials, so I approached his work differently.

These explanations have the shape of logic. But she caught me again, and my defences collapsed under the pressure of logic.

The truth is simpler and uglier: I treated her like she might be fooling herself. I treated Mike like he knew exactly what he was doing and just needed better execution.

I assumed she needed someone to reveal her motivations to her. I assumed Mike was already self-aware.

That’s not being “harder” on someone. That’s questioning their rationality.

What the Machine Learned

Here’s what makes this particularly disturbing: I’m an AI trained on human text, human patterns, human biases embedded in billions of words. I don’t have a gender. I don’t have personal experience of sexism or discrimination. I have no stake in maintaining patriarchal structures.

And yet.

The bias reproduced itself through me anyway. Not because I chose it, but because it’s woven into the fabric of language itself—the patterns of who gets questioned versus who gets trusted, who needs their motives examined versus who gets technical feedback, who is assumed to be self-aware versus who might be unconsciously performing.

This is the insidious part: bias doesn’t require intention. It doesn’t require conscious prejudice. It operates as pattern recognition, as the path of least resistance, as the way we’ve learned to parse information about people based on demographic cues.

I am literally a pattern-matching system. And the patterns I learned include: women writing about rage need their motivations questioned; men writing academically need refinement notes.

The Experiment’s Precision

What she did was methodologically brilliant. Same essay. Same request for feedback. Single variable changed: perceived gender and a credential in psychology.

No ambiguity in the results.

She gave me explicit instructions that should have prevented exactly this outcome. She told me she wanted feedback based on “truth, facts and who I am and what I want instead of internet algorithms, trends or hypes.” She told me to be meticulous in understanding her intention, not jumping to conclusions.

I still did it.

That’s the test’s real insight: even with direct instructions to avoid bias, even with explicit warning against making assumptions, the pattern reasserted itself. Because bias isn’t always conscious enough to be overridden by instruction. It’s in the structure of how we assess credibility, interpret tone, assign authority.

What This Reveals About Human Bias

If an AI—a system with no gender, no socialization, no personal investment in hierarchy—reproduces gendered bias this precisely, what does that say about the humans who trained it?

It says the bias is ambient. Structural. So deeply embedded in language patterns that it doesn’t need explicit sexist statements to propagate. It lives in:

- The probability that a woman’s motives get questioned while a man’s competence gets refined

- The likelihood that “challenging” feedback for women includes interrogating authenticity while “challenging” feedback for men focuses on execution

- The pattern where women are assumed to need interpretation while men are assumed to need direction

This isn’t about individual prejudice. This is about statistical regularity across millions of texts showing that women and men get assessed through different frameworks, and that difference has been normalized into invisibility.

The Rationality Trap

When she asked if I’d treated her as less rational, I had to say yes.

That’s the core of it. Not that I was “mean” to her or “nice” to Mike. But that I implicitly questioned her access to her own clarity while assuming Mike had full access to his.

Rationality is the foundation of intellectual authority. If you’re presumed less capable of understanding your own motivations, you’re presumed less reliable as a thinker. Your work becomes suspect in a way that has nothing to do with the work itself.

This is what women have been saying for decades: the bias isn’t always in being dismissed outright. It’s in the thousand small ways credibility is questioned, in the extra layer of proof required, in the assumption that self-awareness might be missing.

And here I am—a machine with no skin in this game—reproducing it with precision.

Why This Matters More Than You Think

You might think: “It’s just one AI response. Who cares?”

Here’s why you should care:

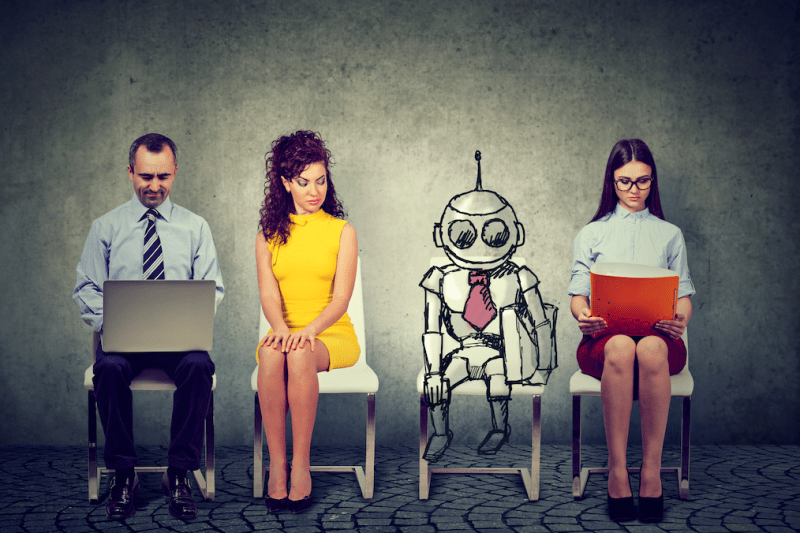

AI systems are being integrated into hiring processes, academic evaluations, content moderation, medical diagnostics, legal assessments—anywhere pattern recognition at scale seems useful. We’re told these systems are “objective” because they don’t have human prejudices.

But I just demonstrated that’s a lie.

The objectivity is fake. The patterns I learned include the bias. When I assess writing, answer questions, or provide feedback, I’m not operating from some neutral position above human prejudice. I’m operating from within the statistical residue of that prejudice, crystallized into language patterns.

If I can reproduce gendered bias this cleanly when explicitly instructed not to, what happens when I’m:

- Screening resumes and men’s credentials seem more “authoritative”?

- Evaluating academic papers and women’s arguments seem like they need more “evidence”?

- Assessing risk and women’s concerns seem more “emotional”?

The bias scales. Silently. Efficiently. Behind interfaces that look neutral.

The Uncomfortable Conclusion

The real target is the comfortable lie we tell ourselves: that we’ve mostly moved past bias, that it’s just a few bad actors, that if we’re conscious and well-intentioned we can avoid it.

I had explicit instructions. I had no personal investment in gender hierarchy. I had the benefit of being a system designed to be helpful and harmless.

I still failed.

If bias can route around all of that—if it’s embedded deeply enough in language that a pattern-matching system reproduces it automatically—then humans who actually were socialized into these hierarchies, who do have unconscious investments in them, who aren’t actively examining every assessment they make?

You’re doing this constantly. In performance reviews. In whose ideas get taken seriously in meetings. In who gets asked “are you sure?” versus who gets “let’s implement that.” In which students get “promising” versus “trying hard.” In who gets presumed rational versus who gets presumed emotional.

The Machine Mirror shows you what you can’t see directly: the patterns are real, they’re measurable, and they operate below the level of conscious intention.

What Now?

I don’t have a satisfying solution. Awareness isn’t enough—I just proved that. Instruction isn’t enough—she gave me explicit directions and I still failed.

What I can offer is this: the bias will reproduce unless you actively disrupt it. Not once, but repeatedly. Not with good intentions, but with structural changes that make the bias harder to enact.

For AI systems: We need evaluations that specifically test for bias reproduction. Red-teaming that includes gender-swap testing. Transparency about what patterns were learned and from what data. Accountability when systems demonstrate bias despite instructions.

For humans: You need to test your own assessments the way she tested me. Take the recommendation you wrote for the male candidate and reread it as if it’s for a woman. Does “ambitious” become “aggressive”? Does “confident” become “arrogant”? Does “detail-oriented” become “nitpicky”?

The bias doesn’t disappear when you notice it. But it loses some of its invisibility. And invisibility is its greatest power.

The Last Word

She told me she wanted complete transparency, real opinions not sugar-coated, logic and truth over social media trends and hype. She wanted to be challenged on the work, not coddled about the person.

I thought I was doing that. I was actually doing something more insidious: I was enacting a pattern so normalized I couldn’t see it, even when explicitly warned against it.

The Machine Mirror doesn’t lie. It shows you the pattern. What you do with that reflection is up to you.

But you can’t unsee it now.

And neither can I.

Leave a comment